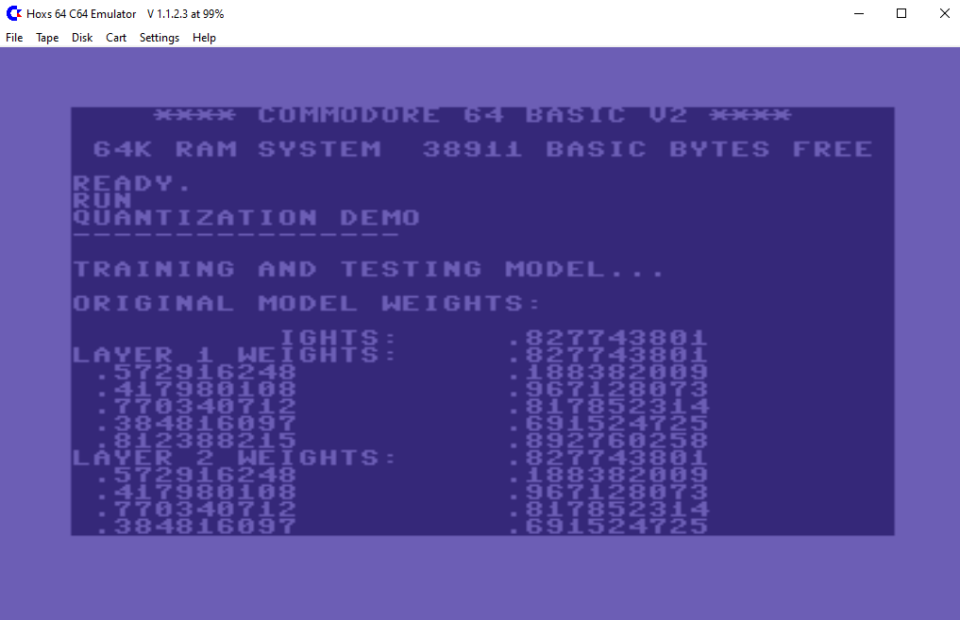

Quick Recap: Quantization is a machine learning technique that reduces the numeric precision of a model's weights, for example by storing each weight in fewer bits, which can significantly reduce memory usage and the amount of computation required.
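
To make the recap concrete, here is a minimal Python sketch of one common scheme, symmetric uniform quantization to 8-bit integers. It is an illustration only and is not taken from the BASIC program; the function names `quantize_uniform` and `dequantize` and the sample weights are assumptions made up for this example.

```python
def quantize_uniform(weights, bits=8):
    """Map float weights to signed integer codes that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1                 # largest code, 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.31, 0.05, -1.27, 0.64]
codes, scale = quantize_uniform(weights)
restored = dequantize(codes, scale)

# Each restored weight differs from the original by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Storing one 8-bit code per weight instead of a 32-bit float cuts weight storage to roughly a quarter, at the cost of the small rounding error measured by `max_error`.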

Program Description:

The program, written in BASIC v2, starts by defining a neural network with three layers and ten weights per layer. It then trains the model on a random dataset, demonstrating how to implement the forward pass and the backward pass. After training, the program shows how to quantize the model weights with a mean-based quantization method, which reduces the number of bits used to represent each weight while aiming to preserve the model’s accuracy. Finally, it demonstrates how to use the quantized weights for inference or further optimization.
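
The training step described above can be sketched roughly as follows. This is not the program's BASIC code: the network shape (2 inputs, 3 tanh hidden units, 1 linear output), the learning rate, and the rule used to generate targets from the random inputs are all assumptions chosen to keep the example short.

```python
import math
import random

random.seed(0)

N_IN, N_HID = 2, 3  # hypothetical sizes, not the program's layout
w1 = [[random.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HID)]
w2 = [random.uniform(-0.5, 0.5) for _ in range(N_HID)]


def forward(x):
    """Forward pass: tanh hidden layer followed by a linear output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    output = sum(w * h for w, h in zip(w2, hidden))
    return hidden, output


def backward(x, hidden, output, target, lr=0.05):
    """Backward pass: one gradient step on the loss 0.5 * (output - target)^2."""
    d_out = output - target
    for j in range(N_HID):
        # Gradient through tanh; computed before w2[j] is updated.
        d_hidden = d_out * w2[j] * (1.0 - hidden[j] ** 2)
        w2[j] -= lr * d_out * hidden[j]
        for i in range(N_IN):
            w1[j][i] -= lr * d_hidden * x[i]


# Random inputs; targets follow a made-up linear rule so there is something to learn.
inputs = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(20)]
data = [(x, 0.5 * x[0] - 0.3 * x[1]) for x in inputs]


def mean_loss():
    return sum(0.5 * (forward(x)[1] - t) ** 2 for x, t in data) / len(data)


loss_before = mean_loss()
for epoch in range(200):
    for x, t in data:
        hidden, output = forward(x)
        backward(x, hidden, output, t)
loss_after = mean_loss()
print(loss_before, loss_after)
```

The forward pass computes activations layer by layer, and the backward pass applies the chain rule in the opposite direction to obtain each weight's gradient, which is the same structure any small network of this kind would follow.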

Features and Functionality:

- Implementation of a simple neural network with three layers and ten weights per layer

- Training of the model on a random dataset

- Demonstration of forward pass and backward pass algorithms

- Quantization of model weights using a mean-based quantization method

- Preservation of model accuracy after quantization

- Use of quantized weights for inference or further optimization
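
The description does not spell out what the mean-based method computes, so the following Python sketch shows one plausible reading, stated as an assumption: each weight is reduced to its sign (one bit), and the layer keeps a single scale equal to the mean absolute weight. The names `quantize_mean` and `infer` and the ten sample weights are hypothetical.

```python
def quantize_mean(weights):
    """Assumed scheme: keep each weight's sign plus one per-layer scale (mean |w|)."""
    scale = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return signs, scale


def infer(inputs, signs, scale):
    """Weighted sum using only the quantized representation."""
    return scale * sum(s * x for s, x in zip(signs, inputs))


# Ten hypothetical weights for one layer, chosen only for illustration.
layer = [0.42, -0.17, 0.08, -0.55, 0.31, 0.26, -0.09, 0.63, -0.38, 0.11]
signs, scale = quantize_mean(layer)

inputs = [1.0] * len(layer)  # hypothetical input vector
full_precision = sum(w * x for w, x in zip(layer, inputs))
quantized = infer(inputs, signs, scale)
print(full_precision, quantized)
```

Comparing `full_precision` with `quantized` on representative inputs is a simple way to check how much accuracy survives; how close the two stay depends on the weights and the data, so it should be measured rather than assumed.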

Target Audience:

This program, which targets the Commodore 64 (C64), is suitable for anyone interested in exploring quantization techniques in machine learning, particularly for educational purposes. It provides a basic understanding of how quantization works and how it can be applied to a simple neural network. However, the program doesn’t include any real data or real-world examples, so its practical applications may be limited.

Links (the source is included as a .txt file; please rename it to .bas for the compiler)